Every time a new technology emerges, the conversation seems to jump to the same question: Can it replace humans? When it comes to AI in mental health, folks have been asking that same question for the last couple of years. That’s not just the wrong question; it’s a distraction from what actually matters (and from what's actually doable and ethical)! AI can be incredibly useful, but the goal shouldn't be replacing therapists. The goal should be making therapists' jobs easier so they can focus on what they do best.

Think about robotic-assisted surgery. (And yes, I had to do some research, because I did not know these facts off the top of my head.) The first use of a surgical robot was back in 1985, when a robotic arm helped perform a neurosurgical biopsy. In 2000, the FDA approved the "da Vinci Surgical System" (not invented by da Vinci himself, sorry), allowing for more precise and minimally invasive procedures. And yet, TWO DECADES LATER, surgeons are still in the room, still the ones making the decisions, still the professionals responsible for patient care. No one seriously argues that robots should replace them entirely. Instead, the technology exists to assist, not to take over.

Now imagine a world where some startup decides you should be able to perform surgery on yourself. They ship out at-home robotic surgery kits, slap an AI-powered voice assistant on top, and expect you to take care of your own appendectomy. (I'm imagining Alexa chiming in with "what organ would you like to remove today?") It would be absurd. Because no matter how advanced the technology gets, there are things that simply require a trained professional.

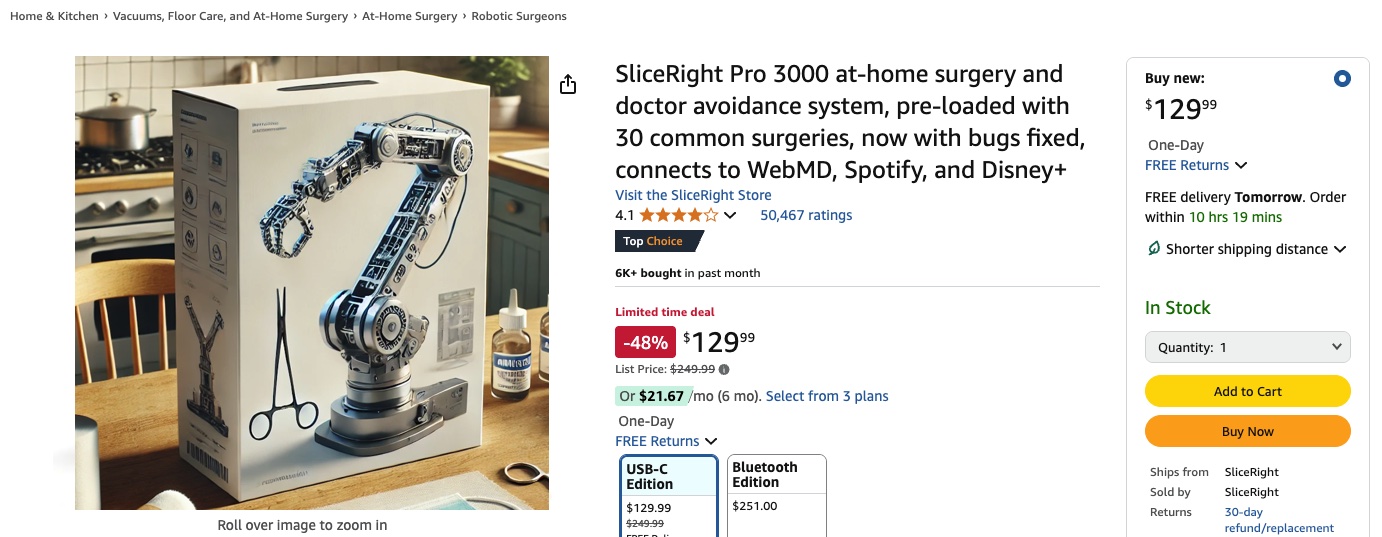

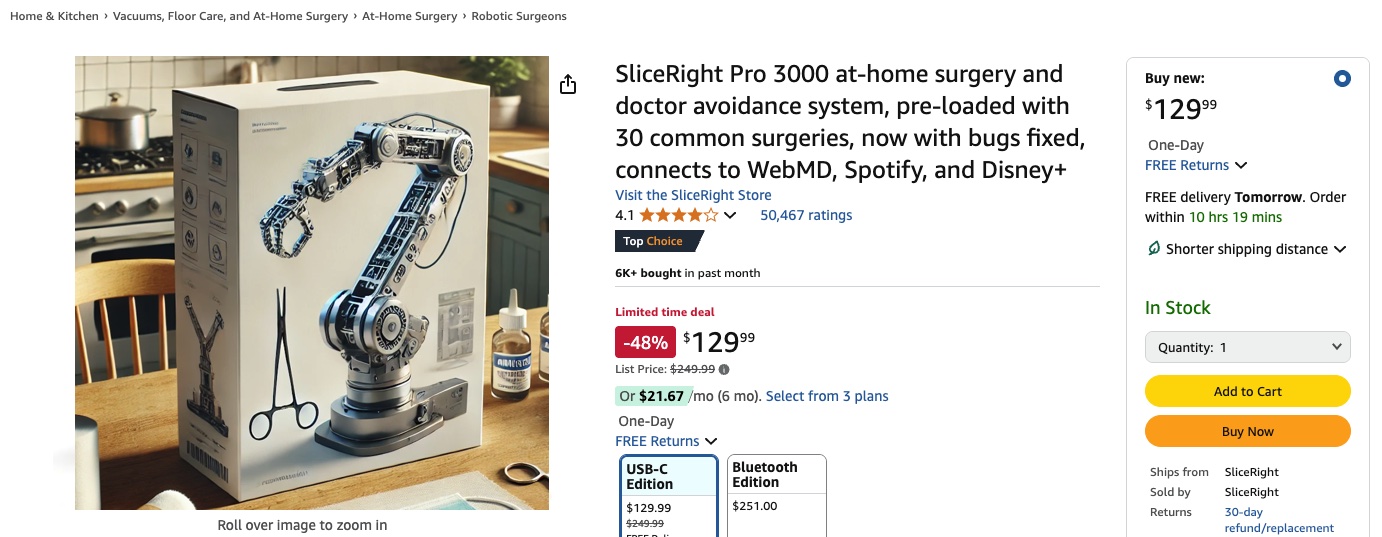

What if you went shopping online and saw this:

Ridiculous, right? (And also ridiculous is the amount of time Jon spent creating that fake product listing...)

Mental health is no different. AI can help handle the tedious stuff that's involved with therapy -- documentation, scheduling, paperwork -- but it can’t replace the expertise, intuition, and human connection that therapists bring to their work. It’s not supposed to. And we shouldn't be trying to force it to -- or force people (therapists and clients alike) into accepting this idea. At Quill, that’s exactly how we approach AI: as a tool to make therapists’ lives easier, not as a replacement for the actual work of therapy.

So instead of asking "Can AI replace therapists?", we should be discussing and answering the question "How can AI help therapists?" (Or in general, "How can we as a society help therapists?" Replacement seems like... the opposite of helping them.)